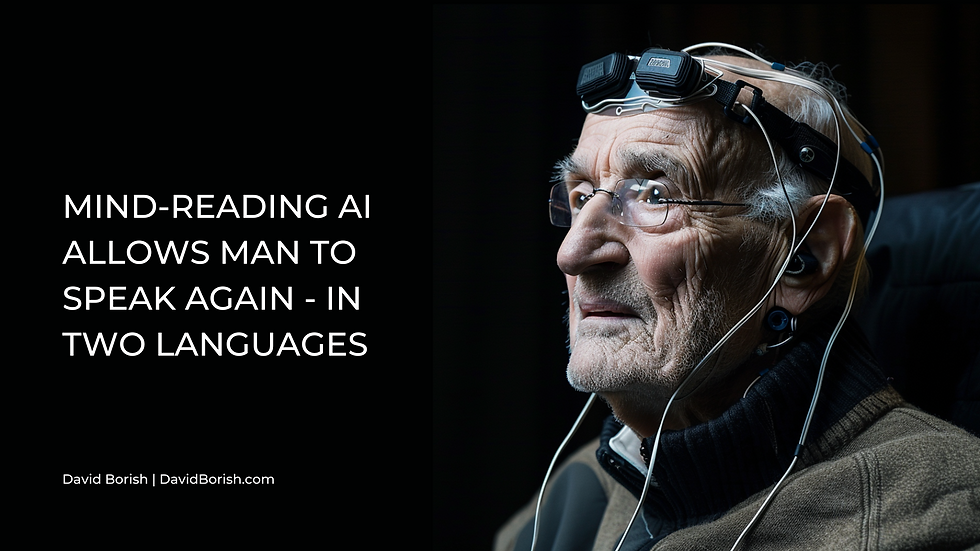

Mind-Reading AI Allows Man to Speak Again - In Two Languages

- David Borish

- May 30, 2024


Scientists have developed the first brain-computer interface capable of decoding attempted speech in two languages in real time. The AI-powered system, connected to a brain implant, allowed a man who cannot speak intelligibly because of a stroke to hold conversations in his native Spanish as well as in English, which he learned later in life.

The breakthrough, published in Nature Biomedical Engineering, provides new insights into how the brain processes language. Researchers found that neural activity patterns for individual words were largely similar between Spanish and English, and that these patterns were consistent with those seen in people who grew up bilingual, even though the participant learned English, his second language, in his 30s, after his stroke.

The technology works by first training the AI system on the distinct neural patterns generated when the participant tries to say specific words out loud. Then, as he attempts to speak, the system decodes those patterns in real time and outputs the most probable words and sentences in both English and Spanish. In tests, the AI identified which language he was speaking with 88% accuracy and decoded the full sentence correctly 75% of the time.
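The article does not describe the decoder's internals. As a rough illustration of how a system could output "the most probable words and sentences in both English and Spanish" and pick a language along the way, the Python sketch below combines hypothetical per-word classifier probabilities with a tiny bigram language model for each language, then keeps the highest-scoring sentence overall. The language of that winning sentence serves as the guess at which language he meant to speak. Everything here (the vocabularies, the probabilities, `FLOOR`, `lm_weight`, and `decode_bilingual`) is invented for illustration and is not the researchers' model.

```python
import itertools
import math

FLOOR = 1e-4  # hypothetical probability for unseen words or bigrams

# Hypothetical toy bigram language models: P(word | previous word), "<s>" = sentence start.
LANGUAGE_MODELS = {
    "en": {("<s>", "i"): 0.6, ("i", "am"): 0.7, ("am", "fine"): 0.5},
    "es": {("<s>", "tengo"): 0.5, ("tengo", "sed"): 0.6,
           ("<s>", "estoy"): 0.4, ("estoy", "bien"): 0.7},
}
VOCAB = {"en": ["i", "am", "fine"], "es": ["tengo", "sed", "estoy", "bien"]}


def lm_log_prob(words, bigrams):
    """Log-probability of a word sequence under one language's bigram model."""
    total, prev = 0.0, "<s>"
    for w in words:
        total += math.log(bigrams.get((prev, w), FLOOR))
        prev = w
    return total


def neural_log_prob(words, slot_probs):
    """Log-probability that the (hypothetical) neural classifier saw each word in its slot."""
    return sum(math.log(p.get(w, FLOOR)) for w, p in zip(words, slot_probs))


def decode_bilingual(slot_probs, lm_weight=1.0):
    """Return (language, words, score) for the best sentence across both languages."""
    best = None
    for lang, bigrams in LANGUAGE_MODELS.items():
        # Exhaustive search over a toy vocabulary; a practical system would need beam search.
        for words in itertools.product(VOCAB[lang], repeat=len(slot_probs)):
            score = neural_log_prob(words, slot_probs) + lm_weight * lm_log_prob(words, bigrams)
            if best is None or score > best[2]:
                best = (lang, words, score)
    return best


if __name__ == "__main__":
    # Hypothetical decoder output: one probability distribution per attempted word.
    slot_probs = [
        {"tengo": 0.4, "estoy": 0.3, "i": 0.2, "am": 0.1},
        {"sed": 0.5, "bien": 0.2, "am": 0.2, "fine": 0.1},
    ]
    lang, words, score = decode_bilingual(slot_probs)
    print(lang, " ".join(words))  # es tengo sed
```

Scoring whole sentences, rather than classifying the language first, lets the language model correct ambiguous single-word guesses (here, "estoy" is nearly as likely as "tengo" in the first slot) while the language choice falls out of the same comparison.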

Currently, this brain-computer interface requires surgically implanting electrodes on the brain's surface. As the technology advances, however, it could potentially work with less invasive methods, such as EEG or functional near-infrared spectroscopy (fNIRS), that measure neural activity from outside the skull. In the nearer term, larger training datasets that include additional participants and languages could improve the system's versatility and decoding accuracy.

Looking ahead, this multilingual speech prosthesis technology, coupled with further developments in artificial intelligence language systems, could open up transformative possibilities. For example:

- Restoring fluid communication for people with speech impairments in their preferred languages
- Providing real-time cross-language speech-to-speech or speech-to-text translation for seamless multilingual conversations
- Supporting spoken language use and language learning for people with conditions such as apraxia, by decoding the speech they intend to produce
- Combining the system with language models to assist with word finding or sentence completion
- Integrating with augmentative and alternative communication (AAC) devices for a wider range of communication options

Multilingual brain-computer interfaces represent a significant leap forward in neurotechnology. By using artificial intelligence to decode the neural activity associated with attempted speech, researchers are opening new avenues for restoring communication to people with speech impairments. Moreover, the potential applications extend beyond medicine, with real-time language translation and seamless cross-cultural communication a possibility on the horizon.

